When we use a huge flat file in a lookup, what type of cache do we have to use?

In the previous blog, Top Informatica Interview Questions You Must Prepare For In 2021, we went through all the important questions which are frequently asked in Informatica interviews. Let's take a deeper dive into the Informatica interview questions and understand the typical scenario-based questions that are asked in Informatica interviews.

In the day and age of Big Data, the success of any company depends on data-driven decision making and business processes. In such a scenario, data integration is critical to the success formula of any business, and mastery of an end-to-end agile data integration platform such as Informatica PowerCenter 9.X is sure to put you on the fast track to career growth. There has never been a better time to get started on a career in ETL and data mining using Informatica PowerCenter Designer.

Go through this Edureka video delivered by our Informatica certification training expert, which explains what it takes to land a job in Informatica.

Informatica Interview Questions and Answers for 2022 | Edureka

If you are exploring a job opportunity around Informatica, look no further than this blog to prepare for your interview. Here is an exhaustive list of scenario-based Informatica interview questions that will help you crack your Informatica interview. However, if you have already taken an Informatica interview, or have more questions, we encourage you to add them in the comments tab below.

Informatica Interview Questions (Scenario-Based):

1. Differentiate between Source Qualifier and Filter Transformation?

| Source Qualifier Transformation | Filter Transformation |
| --- | --- |
| 1. It filters rows while reading the data from a source. | 1. It filters rows from within mapped data. |
| 2. Can filter rows only from relational sources. | 2. Can filter rows from any type of source system. |
| 3. It limits the row sets extracted from a source. | 3. It limits the row set sent to a target. |
| 4. It enhances performance by minimizing the number of rows used in mapping. | 4. It is added close to the source to filter out the unwanted data early and maximize performance. |
| 5. The filter condition uses standard SQL that executes in the database. | 5. It defines a condition using any statement or transformation function that evaluates to TRUE or FALSE. |

2. How do you remove Duplicate records in Informatica? And how many ways are there to do it?

There are several ways to remove duplicates.

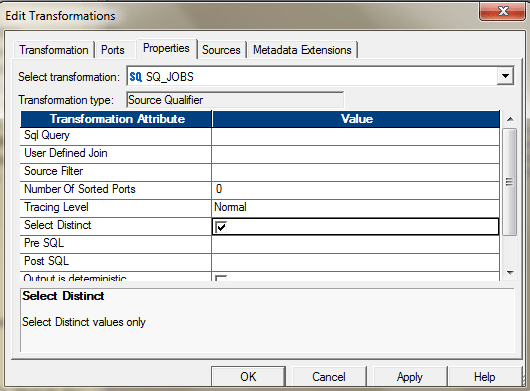

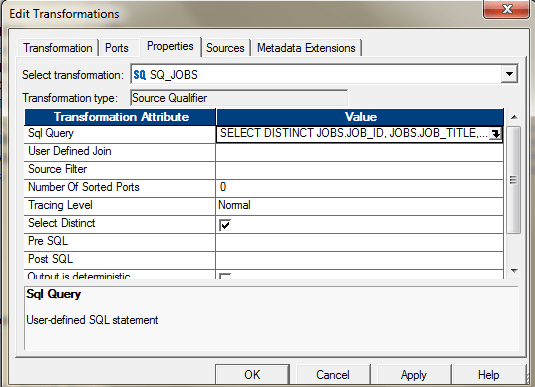

- If the source is a DBMS, you can use the Select Distinct property in the Source Qualifier to select the distinct records.

Or you can also use the SQL Override to perform the same.

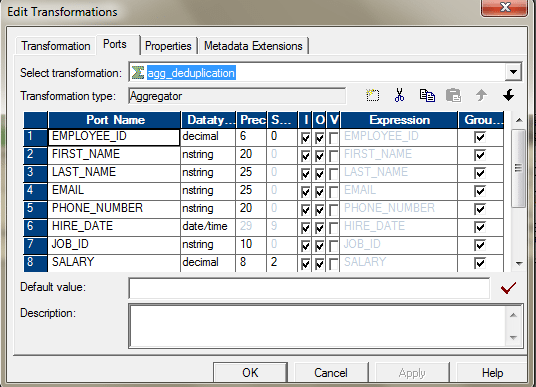

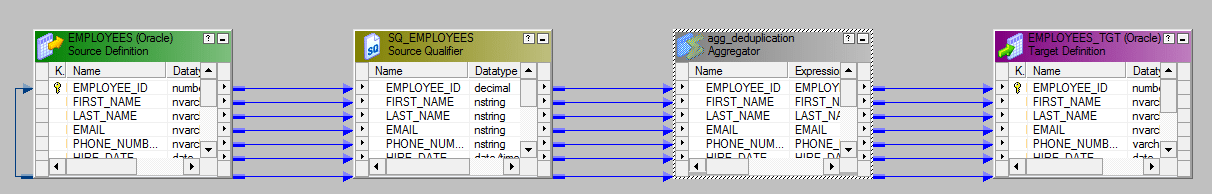

- You can apply, Aggregator and select all the ports as key to go the distinct values. After you pass all the required ports to the Aggregator, select all those ports , those yous need to select for de-duplication. If yous want to observe the duplicates based on the unabridged columns, select all the ports as group by central.

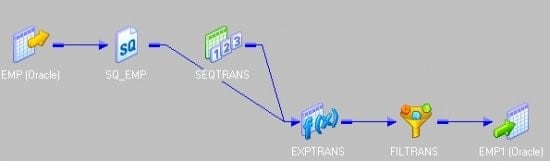

The Mapping volition expect similar this.

The Mapping volition expect similar this.

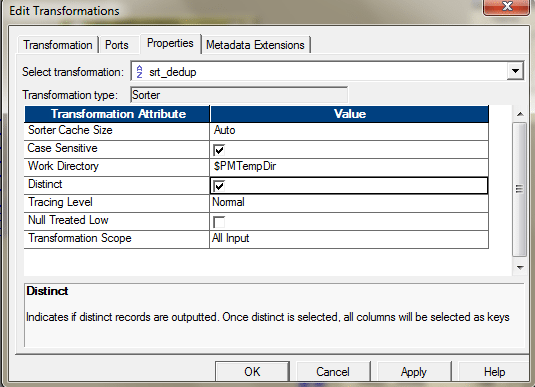

- You can use a Sorter and apply the Sort Distinct property to get the distinct values. Configure the Sorter in the following way to enable this.

- You can use Expression and Filter transformations to identify and remove duplicates if your data is sorted. If your data is not sorted, you may first use a Sorter to sort the data and then apply this logic:

- When you change the property of the Lookup transformation to use the Dynamic Cache, a new port, NewLookupRow, is added to the transformation.

The Dynamic Cache can update the cache as and when it is reading the data.

If the source has duplicate records, you can also use a Dynamic Lookup cache and then a Router to select only the distinct ones.
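As a rough illustration of the Expression-and-Filter approach on sorted data, the sketch below compares each row with the previous one and keeps a row only when it differs. It is plain Python rather than Informatica; the function name `drop_adjacent_duplicates` and the sample rows are assumptions made for this example.

```python
from typing import Iterable, Iterator, Tuple

Row = Tuple[str, ...]


def drop_adjacent_duplicates(sorted_rows: Iterable[Row]) -> Iterator[Row]:
    """Yield each distinct row once; assumes equal rows are adjacent (sorted input)."""
    previous = None  # plays the role of a variable port holding the prior row
    for row in sorted_rows:
        if row != previous:  # the Filter keeps only rows that differ from the prior one
            yield row
        previous = row


# Hypothetical sample data; sorted() stands in for the Sorter transformation.
rows = [("a", "b", "c"), ("x", "y", "z"), ("a", "b", "c")]
distinct = list(drop_adjacent_duplicates(sorted(rows)))
```

The comparison only works because the Sorter places equal rows next to each other, which is why the sort step comes first.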

3. What are the differences between Source Qualifier and Joiner Transformation?

The Source Qualifier can join data originating from the same source database. We can join two or more tables with primary key-foreign key relationships by linking the sources to one Source Qualifier transformation.

If we have a requirement to join mid-stream, or the sources are heterogeneous, then we will have to use the Joiner transformation to join the data.

4. Differentiate between joiner and Lookup Transformation.

Below are the differences between lookup and joiner transformation:

- In lookup we can override the query, but in joiner we cannot.

- In lookup we can provide different types of operators like ">, <, >=, <=, !=", but in joiner only the "=" (equal to) operator is available.

- In lookup we can restrict the number of rows while reading the relational table using the lookup override, but in joiner we cannot restrict the number of rows while reading.

- In joiner we can join the tables based on Normal Join, Master Outer, Detail Outer and Full Outer Join, but in lookup this facility is not available. Lookup behaves like a Left Outer Join of a database.

five. What is meant by Lookup Transformation? Explain the types of Lookup transformation.

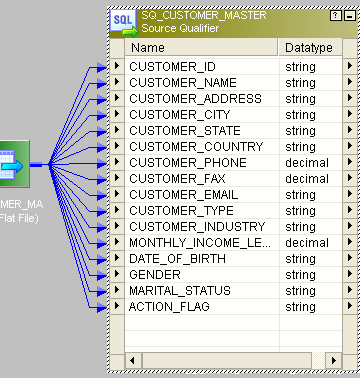

Lookup transformation in a mapping is used to await up information in a flat file, relational table, view, or synonym. Nosotros can also create a lookup definition from a source qualifier.

Nosotros have the post-obit types of Lookup.

- Relational or flat file lookup. To perform a lookup on a flat file or a relational table.

- Pipeline lookup. To perform a lookup on application sources such as JMS or MSMQ.

- Connected or unconnected lookup.

- A connected Lookup transformation receives source data, performs a lookup, and returns data to the pipeline.

- An unconnected Lookup transformation is not connected to a source or target. A transformation in the pipeline calls the Lookup transformation with a :LKP expression. The unconnected Lookup transformation returns one column to the calling transformation.

- Cached or un-cached lookup. We can configure the Lookup transformation to cache the lookup data or to query the lookup source directly every time the lookup is invoked. If the lookup source is a flat file, the lookup is always cached.

6. How can you increase the performance in joiner transformation?

Below are the ways in which you can improve the performance of the Joiner Transformation.

- Perform joins in a database when possible.

In some cases, this is not possible, such as joining tables from two different databases or flat file systems. To perform a join in a database, we can use the following options:

Create and Use a pre-session stored procedure to join the tables in a database.

Use the Source Qualifier transformation to perform the join.

- Join sorted data when possible

- For an unsorted Joiner transformation, designate the source with fewer rows as the master source.

- For a sorted Joiner transformation, designate the source with fewer duplicate key values as the master source.

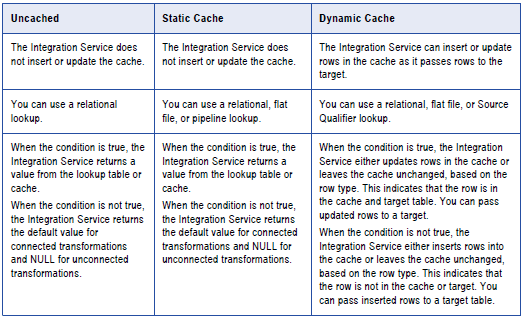

7. What are the types of Caches in lookup? Explain them.

Based on the configurations done at the Lookup transformation/session property level, we can have the following types of lookup caches.

- Un-cached lookup – Here, the Lookup transformation does not create the cache. For each record, it goes to the lookup source, performs the lookup and returns the value. So for 10K rows, it will go to the lookup source 10K times to get the related values.

- Cached lookup – In order to reduce the to-and-fro communication between the lookup source and the Informatica server, we can configure the Lookup transformation to create the cache. In this way, the entire data from the lookup source is cached and all lookups are performed against the cache.

Based on the types of the Caches configured, we can have two types of caches, Static and Dynamic.

The Integration Service performs differently based on the type of lookup cache that is configured. The following table compares Lookup transformations with an uncached lookup, a static cache, and a dynamic cache:

Persistent Cache

By default, the lookup caches are deleted after successful completion of the respective sessions, but we can configure the session to preserve the caches and reuse them next time.

Shared Cache

We can share the lookup cache between multiple transformations. We can share an unnamed cache between transformations in the same mapping. We can share a named cache between transformations in the same or different mappings.
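To make the difference between an uncached lookup, a static cache and a dynamic cache more concrete, here is a small Python sketch. It is not Informatica code: the `LookupSource` class, the function names and the sample keys are assumptions for illustration. The 0/1 flags loosely mirror how the NewLookupRow port marks rows that leave the cache unchanged versus rows inserted into it; update handling is left out.

```python
class LookupSource:
    """Stand-in for the lookup table; counts how often it is read."""

    def __init__(self, rows):
        self.rows = dict(rows)
        self.reads = 0

    def fetch(self, key):
        self.reads += 1  # one round trip per looked-up row
        return self.rows.get(key)

    def fetch_all(self):
        self.reads += 1  # a single read that builds the cache
        return dict(self.rows)


def uncached_lookup(source, keys):
    # Goes back to the lookup source for every input row.
    return [source.fetch(key) for key in keys]


def static_cached_lookup(source, keys):
    # Reads the source once, then answers every lookup from the cache.
    cache = source.fetch_all()
    return [cache.get(key) for key in keys]


def dynamic_cached_lookup(source, keys):
    # The cache is updated while rows are read: 1 = inserted, 0 = already present.
    cache = source.fetch_all()
    new_lookup_row = []
    for key in keys:
        if key in cache:
            new_lookup_row.append(0)
        else:
            cache[key] = None
            new_lookup_row.append(1)
    return new_lookup_row


source = LookupSource({101: "AD", 102: "BG"})
uncached = uncached_lookup(source, [101, 102, 101])
uncached_reads = source.reads

source.reads = 0
cached = static_cached_lookup(source, [101, 102, 101])
cached_reads = source.reads

flags = dynamic_cached_lookup(source, [101, 103, 103])
```

In this sketch the second occurrence of key 103 is flagged 0 because the first occurrence already inserted it into the cache, which is the behaviour the duplicate-removal approach with a dynamic cache and a Router relies on.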

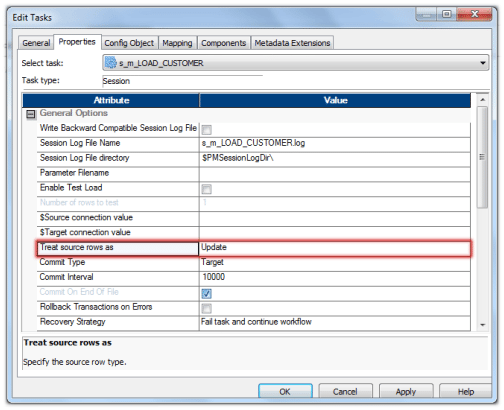

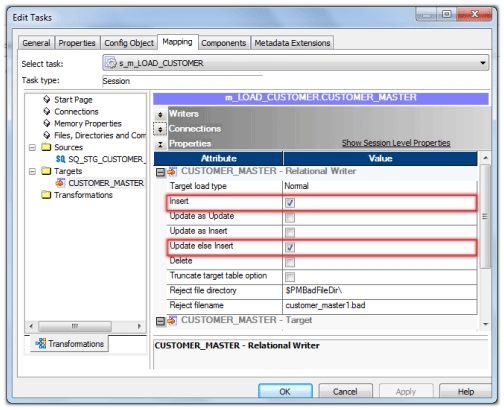

8. How do you update the records with or without using Update Strategy?

We can use the session configurations to update the records. We have several options for handling database operations such as insert, update and delete.

During session configuration, you can select a single database operation for all rows using the Treat Source Rows As setting from the 'Properties' tab of the session.

- Insert: – Treat all rows as inserts.

- Delete: – Treat all rows as deletes.

- Update: – Treat all rows as updates.

- Data Driven: – The Integration Service follows the instructions coded into Update Strategy transformations to flag rows for insert, delete, update, or reject.

Once we have determined how to treat all rows in the session, we can also set options for individual rows, which gives additional control over how each row behaves. We need to define these options in the Transformations view on the Mapping tab of the session properties.

- Insert: – Select this option to insert a row into a target table.

- Delete: – Select this option to delete a row from a table.

- Update: – You have the following options in this situation:

- Update as Update: – Update each row flagged for update if it exists in the target table.

- Update as Insert: – Insert each row flagged for update.

- Update else Insert: – Update the row if it exists. Otherwise, insert it.

- Truncate Table: – Select this option to truncate the target table before loading data.

Steps:

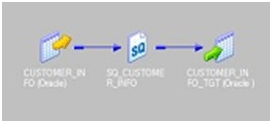

- Design the mapping just like an 'INSERT'-only mapping, without Lookup or Update Strategy transformations.

- First set the Treat Source Rows As property as shown in the image below.

- Next, set the properties for the target table as shown below. Choose the properties Insert and Update else Insert.

These options will make the session update and insert records in the target table without using Update Strategy.

When we need to update a huge table with few records and fewer inserts, we can use this solution to improve the session performance.

The solution for such situations is not to use Lookup Transformation and Update Strategy to insert and update records.

The Lookup Transformation may not perform well as the lookup table size increases, and it also degrades the performance.

9. Why are Update Strategy and Union transformations Active? Explain with examples.

- The Update Strategy changes the row types. It can assign the row types based on the expression created to evaluate the rows, like IIF(ISNULL(CUST_DIM_KEY), DD_INSERT, DD_UPDATE). This expression changes the row type to Insert for rows where CUST_DIM_KEY is NULL and to Update for rows where CUST_DIM_KEY is not NULL.

- The Update Strategy can reject rows. Thereby, with proper configuration, we can also filter out some rows. Hence, sometimes, the number of input rows may not be equal to the number of output rows.

Like IIF(ISNULL(CUST_DIM_KEY), DD_INSERT,

IIF(SRC_CUST_ID != TGT_CUST_ID, DD_UPDATE, DD_REJECT))

Here we are checking if CUST_DIM_KEY is not null and then if SRC_CUST_ID is equal to TGT_CUST_ID. If they are equal, then we do not take any action on those rows; they get rejected.

Union Transformation

In the Union transformation, though the total number of rows passing into the Union is the same as the total number of rows passing out of it, the positions of the rows are not preserved, i.e. row number 1 from input stream 1 might not be row number 1 in the output stream. Union does not even guarantee that the output is repeatable. Hence it is an Active Transformation.
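The nested IIF above can be read as a three-way decision. The following Python sketch restates it; the function name `flag_row` is made up for this example, and the numeric values follow the usual Update Strategy constants (0 insert, 1 update, 2 delete, 3 reject).

```python
# Numeric equivalents of the Update Strategy constants.
DD_INSERT, DD_UPDATE, DD_DELETE, DD_REJECT = 0, 1, 2, 3


def flag_row(cust_dim_key, src_cust_id, tgt_cust_id):
    """Return the row flag that the nested IIF expression would assign."""
    if cust_dim_key is None:        # ISNULL(CUST_DIM_KEY): no dimension row yet
        return DD_INSERT
    if src_cust_id != tgt_cust_id:  # key exists but the IDs differ
        return DD_UPDATE
    return DD_REJECT                # nothing changed: the row is dropped


flags = [
    flag_row(None, 7, None),  # new customer
    flag_row(10, 7, 8),       # existing key, differing IDs
    flag_row(10, 7, 7),       # existing key, same IDs
]
```

Because the third case drops the row, fewer rows can leave the transformation than entered it, which is what makes it active.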

10. How do you load only null records into target? Explain through mapping flow.

Let us say this is our source:

| Cust_id | Cust_name | Cust_amount | Cust_Place | Cust_zip |
| --- | --- | --- | --- | --- |
| 101 | AD | 160 | KL | 700098 |
| 102 | BG | 170 | KJ | 560078 |
| NULL | NULL | 180 | KH | 780098 |

The target structure is also the same, but we have two tables: one which will contain the NULL records and one which will contain the non-NULL records.

We can design the mapping as mentioned below.

SQ –> EXP –> RTR –> TGT_NULL/TGT_NOT_NULL

EXP – In the Expression transformation, create an output port

O_FLAG= IIF ( (ISNULL(cust_id) OR ISNULL(cust_name) OR ISNULL(cust_amount) OR ISNULL(cust_place) OR ISNULL(cust_zip)), 'NULL','NNULL')

** Assuming you need to redirect in case any of the values is null

OR

O_FLAG= IIF ( (ISNULL(cust_id) AND ISNULL(cust_name) AND ISNULL(cust_amount) AND ISNULL(cust_place) AND ISNULL(cust_zip)), 'NULL','NNULL')

** Assuming you need to redirect in case all of the values are null

RTR – Router transformation with two groups

Group 1 connected to TGT_NULL ( Expression O_FLAG='NULL')

Group 2 connected to TGT_NOT_NULL ( Expression O_FLAG='NNULL')
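The flag-then-route flow above can be sketched in Python as follows. This is only an illustration of the logic, not Informatica syntax; the helper names `o_flag` and `route` and the `mode` parameter are assumptions, with `None` standing in for NULL.

```python
def o_flag(row, mode="any"):
    """Mimic the O_FLAG port: 'NULL' if any (or all) column values are missing."""
    nulls = [value is None for value in row.values()]
    is_null = any(nulls) if mode == "any" else all(nulls)
    return "NULL" if is_null else "NNULL"


def route(rows, mode="any"):
    """Mimic the Router: one group per flag value."""
    tgt_null, tgt_not_null = [], []
    for row in rows:
        (tgt_null if o_flag(row, mode) == "NULL" else tgt_not_null).append(row)
    return tgt_null, tgt_not_null


source = [
    {"cust_id": 101, "cust_name": "AD", "cust_amount": 160, "cust_place": "KL", "cust_zip": 700098},
    {"cust_id": 102, "cust_name": "BG", "cust_amount": 170, "cust_place": "KJ", "cust_zip": 560078},
    {"cust_id": None, "cust_name": None, "cust_amount": 180, "cust_place": "KH", "cust_zip": 780098},
]

tgt_null, tgt_not_null = route(source)            # "any" variant
all_null, all_not_null = route(source, mode="all")  # "all" variant
```

With the sample source, the "any" variant sends the third row to TGT_NULL, while the "all" variant sends every row to TGT_NOT_NULL because no row is entirely null.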

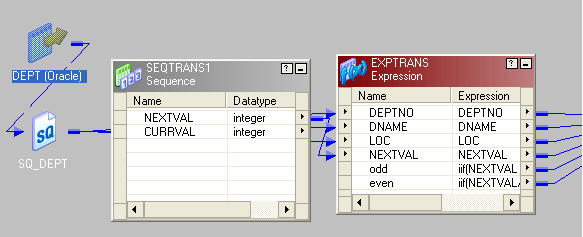

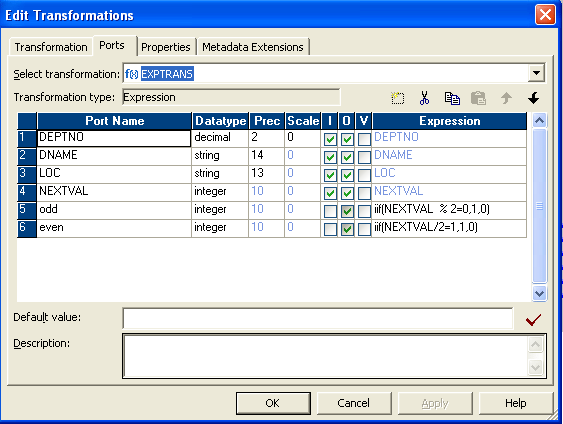

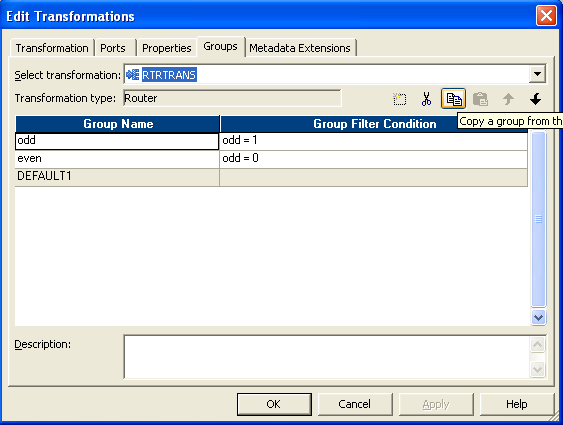

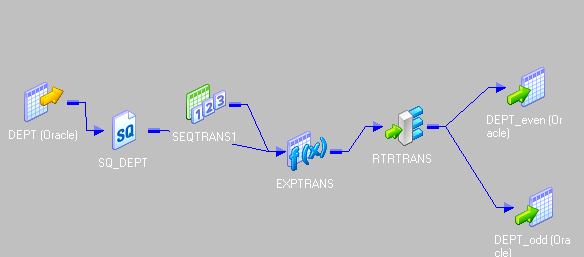

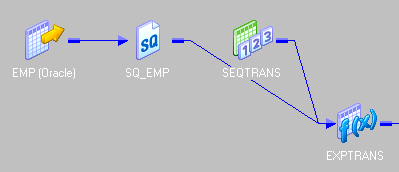

11. How do you load alternate records into different tables through mapping flow?

The idea is to add a sequence number to the records and then divide the record number by 2. If it is divisible, then move it to one target, and if not, then move it to the other target.

- Drag the source and connect to an expression transformation.

- Add the next value of a sequence generator to the expression transformation.

- In the expression transformation make two ports, one "odd" and another "even".

- Write the expression as below

- Connect a router transformation to the expression.

- Make two groups in the router.

- Give the condition as below

- Then send the two groups to different targets. This is the entire flow.
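The steps above boil down to numbering the rows and testing the number modulo 2. A minimal Python sketch of that idea, with `split_alternate` as a hypothetical helper name and `enumerate` standing in for the sequence generator:

```python
def split_alternate(records):
    """Route odd-numbered records to one list and even-numbered records to another."""
    odd_target, even_target = [], []
    for seq, record in enumerate(records, start=1):  # seq plays the role of NEXTVAL
        if seq % 2 == 0:
            even_target.append(record)
        else:
            odd_target.append(record)
    return odd_target, even_target


odd_rows, even_rows = split_alternate(["r1", "r2", "r3", "r4", "r5"])
```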

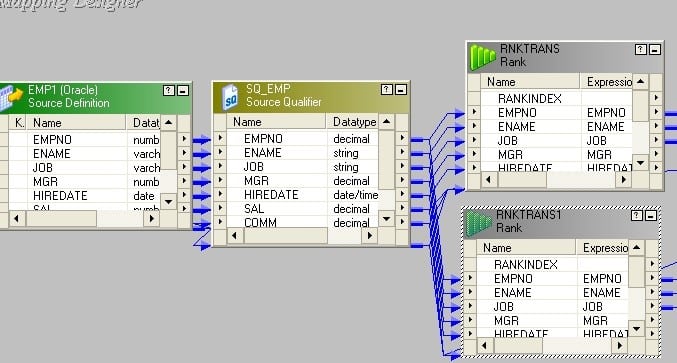

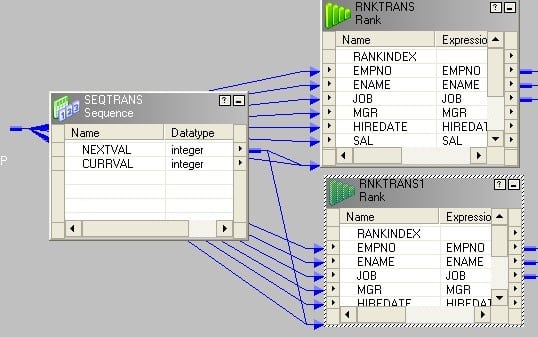

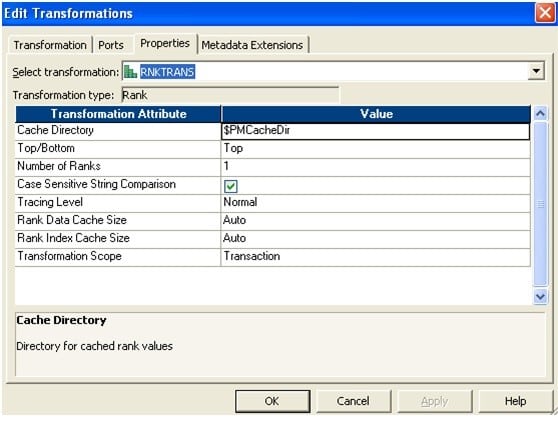

12. How do you load first and last records into target table? How many ways are there to do it? Explain through mapping flows.

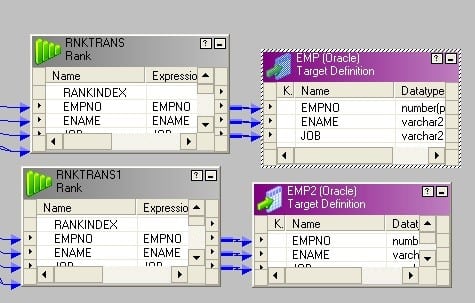

The idea behind this is to add a sequence number to the records and then take the Top 1 rank and Bottom 1 rank from the records.

- Drag and drop ports from source qualifier to two rank transformations.

- Create a reusable sequence generator having start value 1 and connect the next value to both rank transformations.

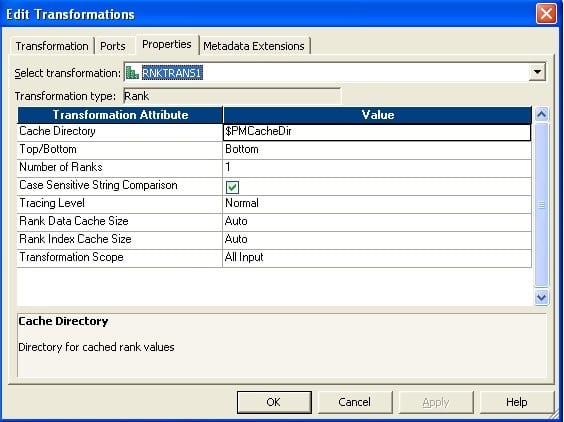

- Set the rank properties as follows. The newly added sequence port should be chosen as the Rank Port. No need to select any port as a Group By Port.

- Rank – 1

- Rank – 2

- Make two instances of the target.

Connect the output port to target.
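As a rough Python equivalent of the two Rank transformations, the sketch below numbers the records and then picks the lowest and the highest sequence number. The function name `first_and_last` is an assumption for this example.

```python
def first_and_last(records):
    """Return the records with the lowest and highest sequence numbers."""
    numbered = list(enumerate(records, start=1))  # (sequence number, record)
    if not numbered:
        return []
    bottom = min(numbered, key=lambda pair: pair[0])  # Bottom 1 rank
    top = max(numbered, key=lambda pair: pair[0])     # Top 1 rank
    return [bottom[1], top[1]]


picked = first_and_last(["r1", "r2", "r3", "r4"])
```

Note that with a single input record this sketch returns it twice, once per rank, just as two independent Rank transformations would each pass it on.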

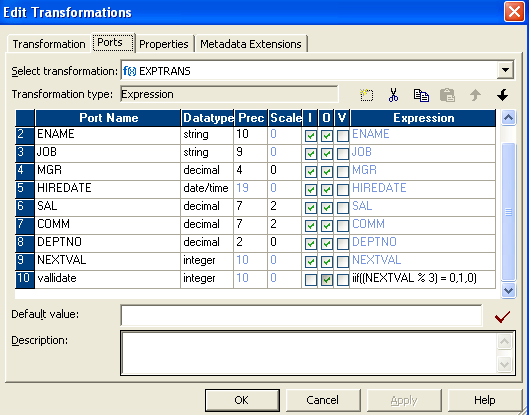

13. I have 100 records in source table, but I want to load 1, 5, 10, 15, 20 … 100 into target table. How can I do this? Explain in detailed mapping flow.

This is applicable for any n = 2, 3, 4, 5, 6… For our example, n = 5. We can apply the same logic for any n.

The idea behind this is to add a sequence number to the records and divide the sequence number by n (for this case, it is 5). If completely divisible, i.e. no remainder, then send them to one target, else send them to the other one.

- Connect an expression transformation after source qualifier.

- Add the next value port of sequence generator to expression transformation.

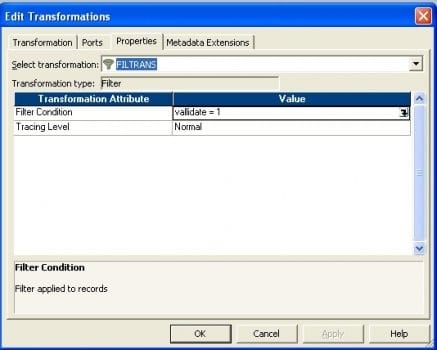

- In the expression create a new port (validate) and write the expression as in the picture below.

- Connect a filter transformation to the expression and write the condition in the property as given in the picture below.

- Finally connect to target.
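The divisibility test described above can be sketched in a few lines of Python. `every_nth` is a hypothetical helper, and `enumerate` again stands in for the sequence generator. The sketch implements only the "no remainder" rule, so it selects records 5, 10, 15 and so on; also loading record 1 would need an extra condition.

```python
def every_nth(records, n):
    """Keep the records whose sequence number is completely divisible by n."""
    return [record for seq, record in enumerate(records, start=1) if seq % n == 0]


selected = every_nth(list(range(1, 101)), 5)
```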

14. How do you load unique records into one target table and duplicate records into a different target table?

Source Table:

| COL1 | COL2 | COL3 |
| --- | --- | --- |
| a | b | c |
| x | y | z |
| a | b | c |
| r | f | u |
| a | b | c |
| v | f | r |
| v | f | r |

Target Table 1: Table containing all the unique rows

| COL1 | COL2 | COL3 |
| --- | --- | --- |
| a | b | c |
| x | y | z |
| r | f | u |
| v | f | r |

Target Table 2: Table containing all the duplicate rows

| COL1 | COL2 | COL3 |
| --- | --- | --- |
| a | b | c |
| a | b | c |
| v | f | r |

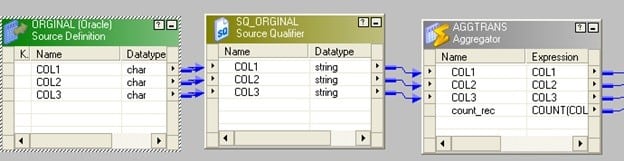

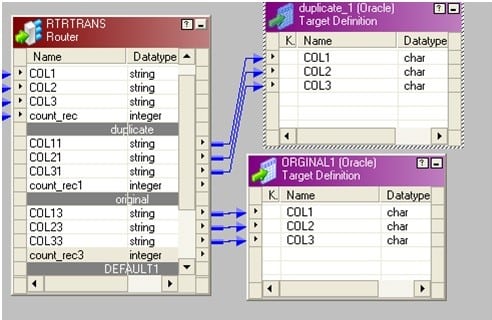

- Drag the source to mapping and connect it to an aggregator transformation.

- In the aggregator transformation, group by the key column and add a new port. Call it count_rec to count the key column.

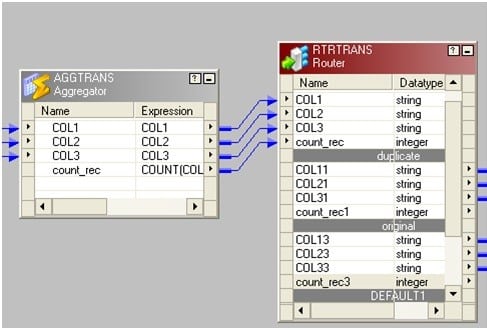

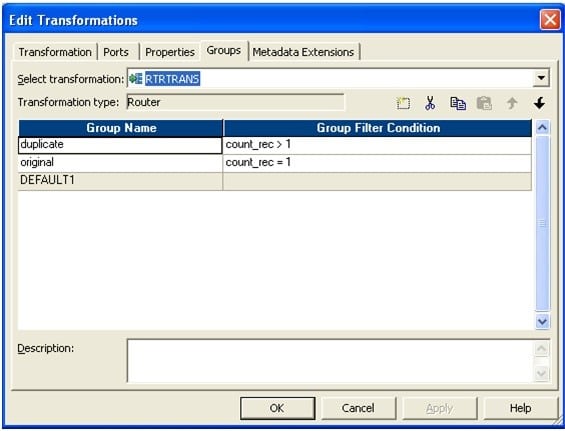

- Connect a router to the aggregator from the previous step. In the router make two groups: one named "original" and another named "duplicate".

In original write count_rec=1 and in duplicate write count_rec>1.

The picture below depicts the group names and the filter conditions.

Connect the two groups to the corresponding target tables.
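The aggregator-plus-router steps can be approximated in Python with a counter per key. This is a sketch of the steps as written, with `route_by_count` as a made-up name; since the aggregator emits one row per group, each key appears once in whichever list it lands in, which is not quite the same as the sample target tables shown earlier.

```python
from collections import Counter


def route_by_count(rows):
    """Group identical rows, count them, and route by count_rec."""
    counts = Counter(rows)  # aggregator: group by all columns, count_rec = COUNT(*)
    original = [row for row, count_rec in counts.items() if count_rec == 1]
    duplicate = [row for row, count_rec in counts.items() if count_rec > 1]
    return original, duplicate


source = [
    ("a", "b", "c"), ("x", "y", "z"), ("a", "b", "c"), ("r", "f", "u"),
    ("a", "b", "c"), ("v", "f", "r"), ("v", "f", "r"),
]
original, duplicate = route_by_count(source)
```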

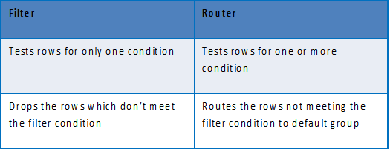

15. Differentiate between Router and Filter Transformation?

16. I have two different source structure tables, but I want to load into a single target table. How do I go about it? Explain in detail through mapping flow.

- We can use a Joiner if we want to join the data sources. Use a Joiner and use the matching column to join the tables.

- We can also use a Union transformation if the tables have some common columns and we need to join the data vertically. Create one Union transformation, add the matching ports from the two sources to two different input groups, and send the output group to the target.

The basic idea here is to use either a Joiner or a Union transformation to move the data from two sources to a single target. Based on the requirement, we may decide which one should be used.

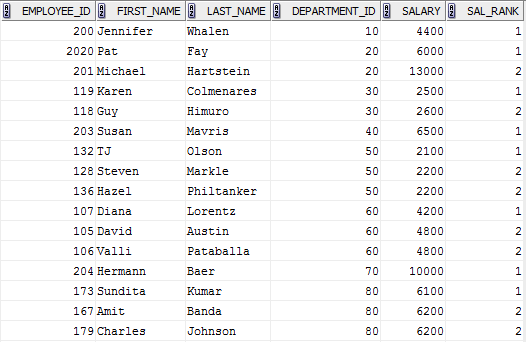

17. How do you load more than 1 Max Sal in each Department through Informatica, or write an SQL query in Oracle?

SQL query:

You can use this kind of query to fetch more than 1 max salary for each department.

SELECT * FROM (

SELECT EMPLOYEE_ID, FIRST_NAME, LAST_NAME, DEPARTMENT_ID, SALARY, RANK() OVER (PARTITION BY DEPARTMENT_ID ORDER BY SALARY DESC) SAL_RANK FROM EMPLOYEES)

WHERE SAL_RANK <= 2

Informatica Approach:

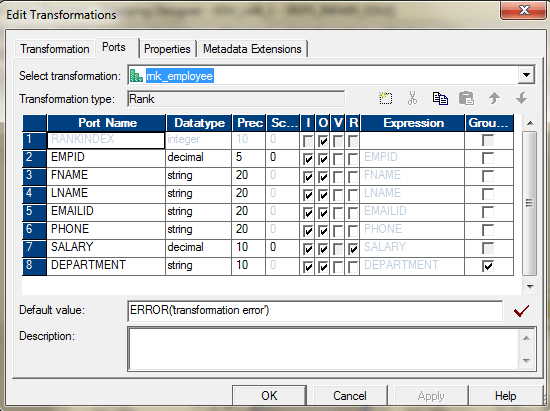

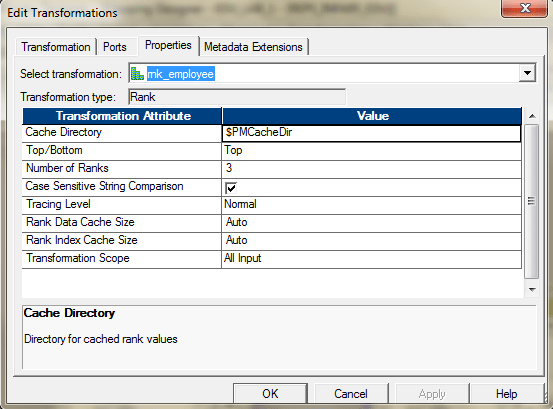

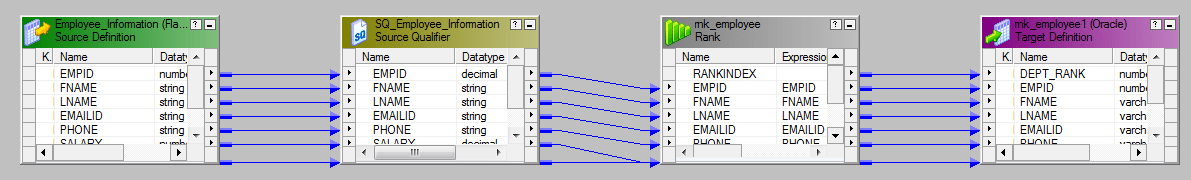

We can use the Rank transformation to achieve this.

Use Department_ID as the group key.

In the properties tab, select Top, 3.

The entire mapping should look like this.

This will give us the top 3 employees earning the maximum salary in their respective departments.
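For readers who think more easily in code, the grouping-and-ranking idea can be sketched in Python. The function `top_n_per_department` and the sample salaries are assumptions for this example, and ties are resolved simply by position rather than by any particular ranking rule.

```python
from collections import defaultdict


def top_n_per_department(employees, n):
    """Return the n highest-paid employees of each department."""
    by_department = defaultdict(list)
    for employee in employees:  # group key: department_id
        by_department[employee["department_id"]].append(employee)

    result = []
    for department_id in sorted(by_department):
        ranked = sorted(by_department[department_id],
                        key=lambda e: e["salary"], reverse=True)
        result.extend(ranked[:n])  # keep only the top n ranks
    return result


# Hypothetical sample data.
employees = [
    {"employee_id": 1, "department_id": 10, "salary": 100},
    {"employee_id": 2, "department_id": 10, "salary": 300},
    {"employee_id": 3, "department_id": 10, "salary": 200},
    {"employee_id": 4, "department_id": 20, "salary": 50},
]
top_two = top_n_per_department(employees, 2)
```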

18. How do you convert a single row from the source into three rows in the target?

We can use the Normalizer transformation for this. If we do not want to use Normalizer, then there is one alternate way for this.

We have a source table containing three columns: Col1, Col2 and Col3. There is only 1 row in the table as follows:

The target table contains only 1 column, Col. Design a mapping so that the target table contains three rows as follows:

- Create three expression transformations exp_1,exp_2 and exp_3 with one port each.

- Connect col1 from Source Qualifier to port in exp_1.

- Connect col2 from Source Qualifier to port in exp_2.

- Connect col3 from source qualifier to port in exp_3.

- Make 3 instances of the target. Connect port from exp_1 to target_1.

- Connect port from exp_2 to target_2 and connect port from exp_3 to target_3.
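In effect, each expression transformation forwards one source column to its own target instance, so one input row becomes three output rows. A minimal Python sketch of that pivot, with `row_to_rows` and the sample values `a`, `b`, `c` invented for illustration:

```python
def row_to_rows(row, columns=("col1", "col2", "col3")):
    """Turn one wide row into one single-column row per source column."""
    return [{"col": row[column]} for column in columns]


# Hypothetical source row; the real values are not given in the text.
target_rows = row_to_rows({"col1": "a", "col2": "b", "col3": "c"})
```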

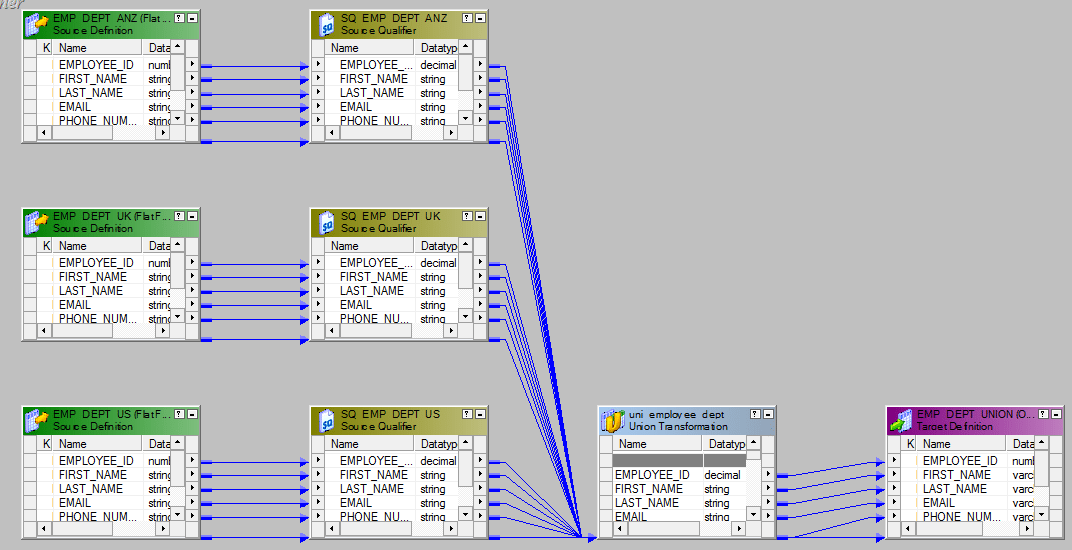

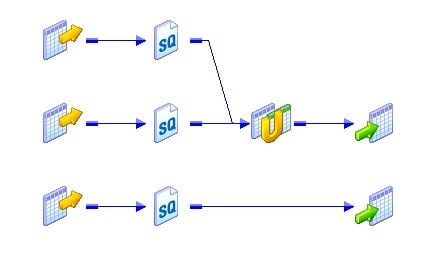

19. I have three same source structure tables. But I want to load into a single target table. How do I do this? Explain in detail through mapping flow.

We will have to use the Union Transformation here. Union Transformation is a multiple input group transformation and it has only one output group.

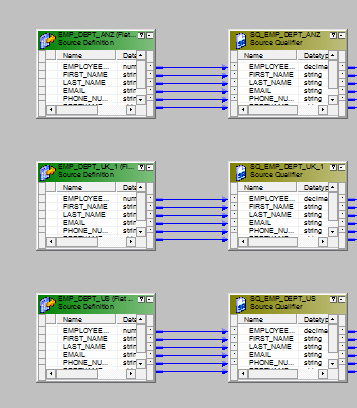

- Drag all the sources in to the mapping designer.

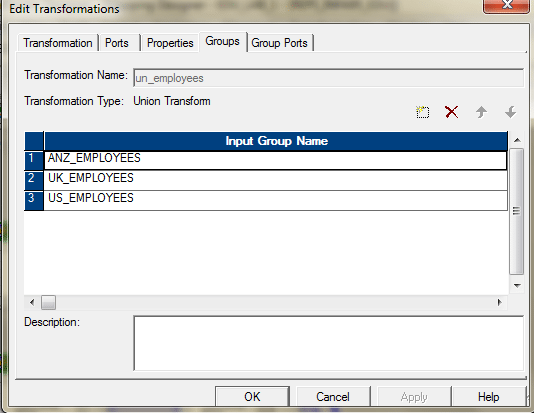

- Add one Union transformation and configure it as follows.

Group Tab.

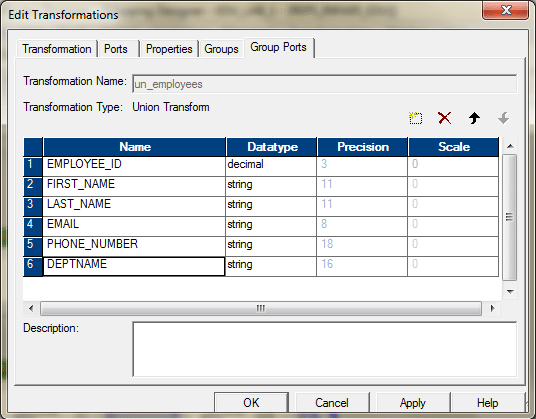

Group Ports Tab.

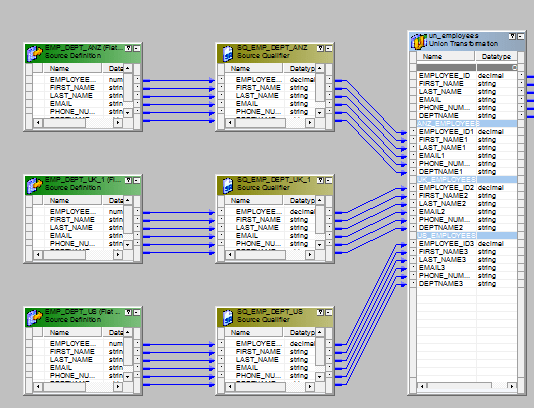

- Connect the sources with the three input groups of the Union transformation.

- Send the output to the target, or via an expression transformation to the target.

The entire mapping should look like this.

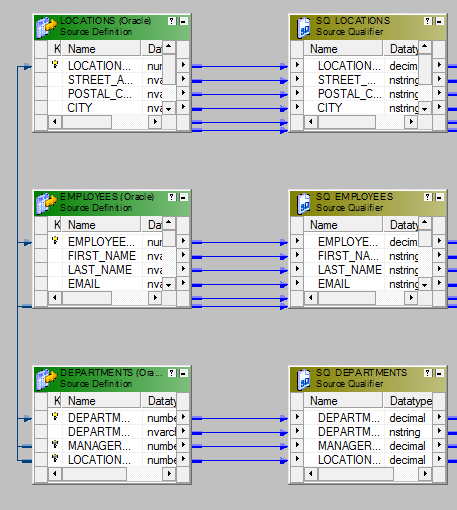

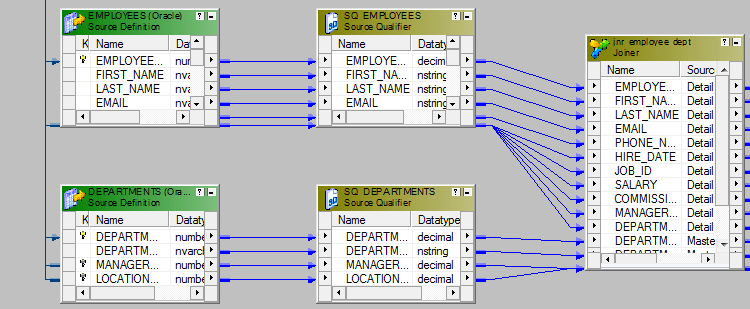

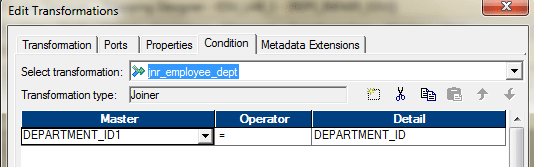

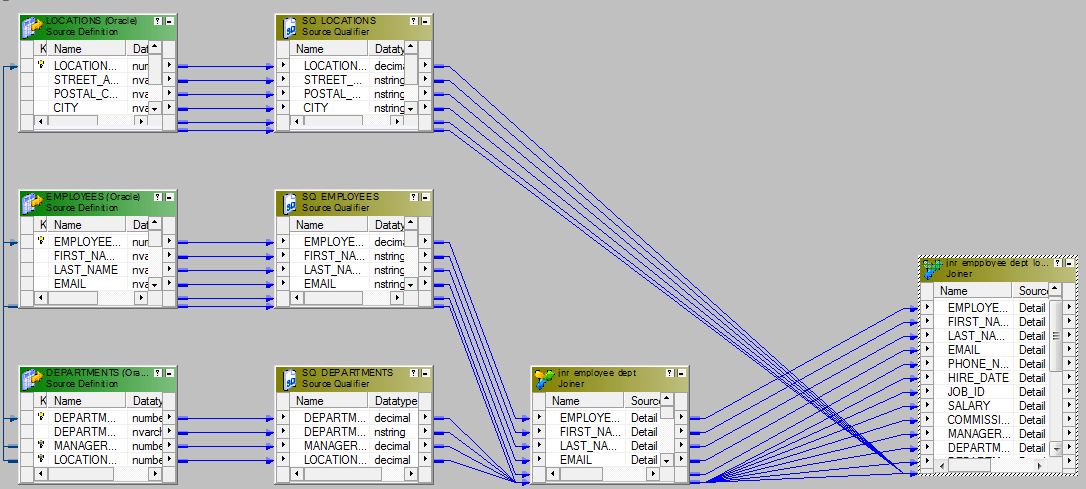

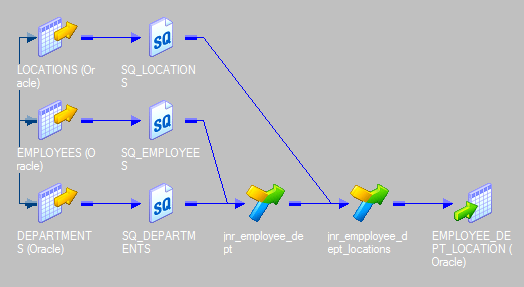

20. How to join three sources using joiner? Explain through mapping flow.

We cannot join more than two sources using a single joiner. To join three sources, we need two joiner transformations.

Let's say we want to join three tables – Employees, Departments and Locations – using Joiner. We will need two joiners. Joiner-1 will join Employees and Departments, and Joiner-2 will join the output from Joiner-1 and the Locations table.

Here are the steps.

- Bring iii sources into the mapping designer.

- Create the Joiner -1 to join Employees and Departments using Department_ID.

- Create the next joiner, Joiner-2. Take the output from Joiner-1 and the ports from the Locations table and bring them to Joiner-2. Join these two data sources using Location_ID.

- The last step is to send the required ports from Joiner-2 to the target, or via an expression transformation to the target table.
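The chaining of two joiners can be illustrated with a small Python sketch. The helper `join` performs a normal (inner) join on one key column; the sample rows and the `city` column are assumptions for this example, not data from the mapping.

```python
from collections import defaultdict


def join(master, detail, key):
    """Normal (inner) join of two lists of dicts on a single key column."""
    index = defaultdict(list)
    for master_row in master:  # cache the master rows by key
        index[master_row[key]].append(master_row)
    return [
        {**detail_row, **master_row}
        for detail_row in detail
        for master_row in index.get(detail_row[key], [])
    ]


# Hypothetical sample data.
employees = [
    {"employee_id": 1, "department_id": 10},
    {"employee_id": 2, "department_id": 20},
]
departments = [
    {"department_id": 10, "location_id": 1700},
    {"department_id": 20, "location_id": 1800},
]
locations = [{"location_id": 1700, "city": "Seattle"}]

joiner_1 = join(departments, employees, "department_id")  # Employees + Departments
joiner_2 = join(locations, joiner_1, "location_id")       # Joiner-1 output + Locations
```

Each call takes exactly two inputs, which is why a third source needs a second join fed by the output of the first.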

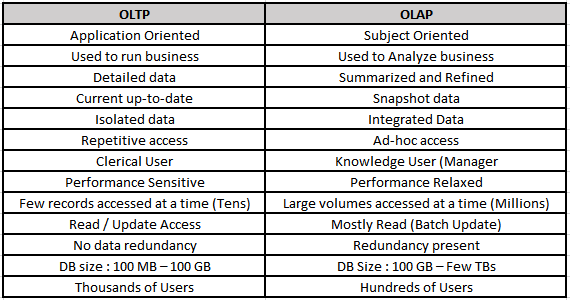

21. What are the differences between OLTP and OLAP?

22. What are the types of Schemas we have in a data warehouse and what are the differences between them?

There are three different data models that exist.

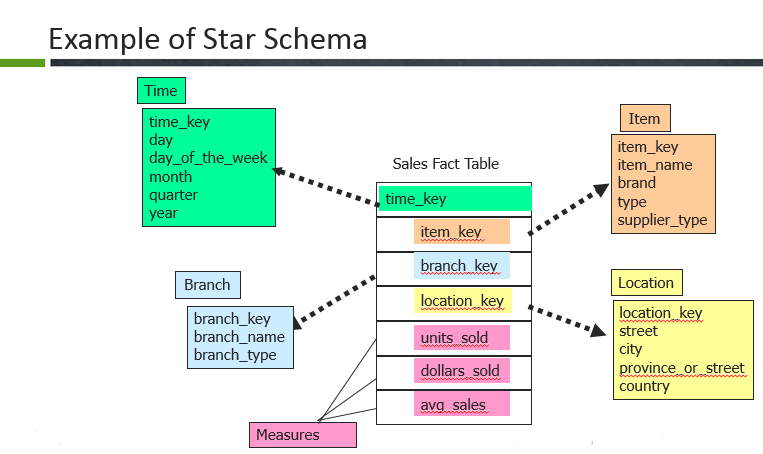

- Star schema

Here, the Sales table is a fact table, and the surrogate keys of each dimension table are referred to here through foreign keys. Example: time key, item key, branch key, location key. The fact table is surrounded by the dimension tables such as Branch, Location, Time and Item. In the fact table there are dimension keys such as time_key, item_key, branch_key and location_key, and the measures are units_sold, dollars sold and average sales. Usually, the fact table consists of more rows compared to the dimensions because it contains all the primary keys of the dimensions along with its own measures.

- Snowflake schema

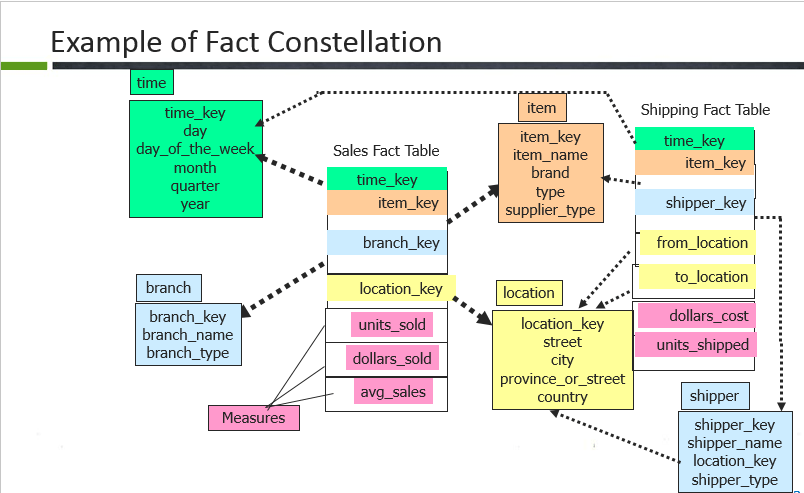

In snowflake, the fact table is surrounded by dimension tables and the dimension tables are also normalized to form a hierarchy. So in this example, the dimension tables such as location and item are normalized further into smaller dimensions forming a hierarchy.

- Fact constellations

In a fact constellation, there are many fact tables sharing the same dimension tables. This example illustrates a fact constellation in which the fact tables sales and shipping share the dimension tables time, branch and item.

23. What is Dimensional Table? Explain the different dimensions.

A dimension table is the one that describes business entities of an enterprise, represented as hierarchical, categorical information such as time, departments, locations, products etc.

Types of dimensions in data warehouse

A dimension table consists of the attributes about the facts. Dimensions store the textual descriptions of the business. Without the dimensions, we cannot measure the facts. The different types of dimension tables are explained in detail below.

- Conformed Dimension:

Conformed dimensions mean the exact same thing with every possible fact table to which they are joined.

Eg: The date dimension table connected to the sales facts is identical to the date dimension connected to the inventory facts.

- Junk Dimension:

A junk dimension is a collection of random transactional codes, flags and/or text attributes that are unrelated to any particular dimension. The junk dimension is simply a structure that provides a convenient place to store the junk attributes.

Eg: Assume that we have a gender dimension and a marital status dimension. In the fact table we need to maintain two keys referring to these dimensions. Instead of that, create a junk dimension which has all the combinations of gender and marital status (cross join the gender and marital status tables and create a junk table). Now we can maintain only one key in the fact table.

- Degenerate Dimension:

A degenerate dimension is a dimension which is derived from the fact table and doesn't have its own dimension table.

Eg: A transactional code in a fact table.

- Role-playing Dimension:

Dimensions which are often used for multiple purposes within the same database are called role-playing dimensions. For example, a date dimension can be used for "date of sale", as well as "date of delivery", or "date of hire".

24. What is Fact Table? Explain the different kinds of Facts.

The centralized table in the star schema is called the Fact table. A Fact table typically contains two types of columns: columns which contain the measures, called facts, and columns which are foreign keys to the dimension tables. The primary key of the fact table is usually the composite key that is made up of the foreign keys of the dimension tables.

Types of Facts in Data Warehouse

A fact table is the one which consists of the measurements, metrics or facts of a business process. These measurable facts are used to know the business value and to forecast the future business. The different types of facts are explained in detail below.

- Additive:

Additive facts are facts that can be summed up through all of the dimensions in the fact table. A sales fact is a good example of an additive fact.

- Semi-Additive:

Semi-additive facts are facts that can be summed up for some of the dimensions in the fact table, but not the others.

Eg: A daily balances fact can be summed up through the customers dimension but not through the time dimension.

- Non-Additive:

Non-additive facts are facts that cannot be summed up for any of the dimensions present in the fact table.

Eg: Facts which have percentages or ratios calculated.

Factless Fact Table:

In the real world, it is possible to have a fact table that contains no measures or facts. These tables are called "Factless Fact tables".

E.g: A fact table which has only a product key and a date key is a factless fact. There are no measures in this table. But you can still get the number of products sold over a period of time.

Fact tables that contain aggregated facts are often called summary tables.

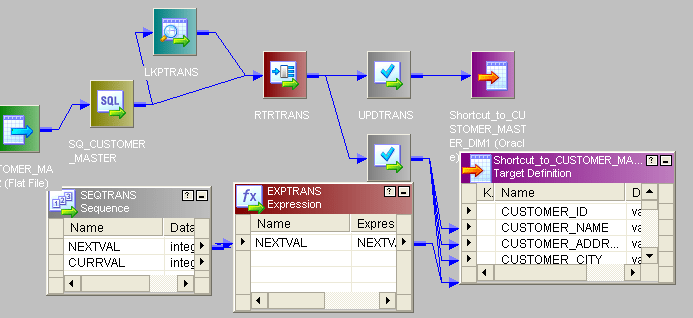

25. Explain in detail about SCD TYPE 1 through mapping.

SCD Type1 Mapping

The SCD Type 1 methodology overwrites old information with new information, and therefore does not need to track historical data.

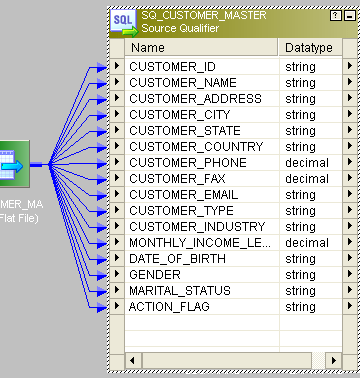

- Here is the source.

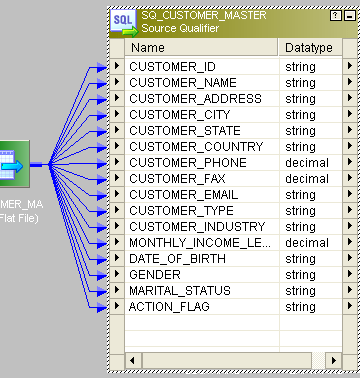

- We will compare the historical data based on key column CUSTOMER_ID.

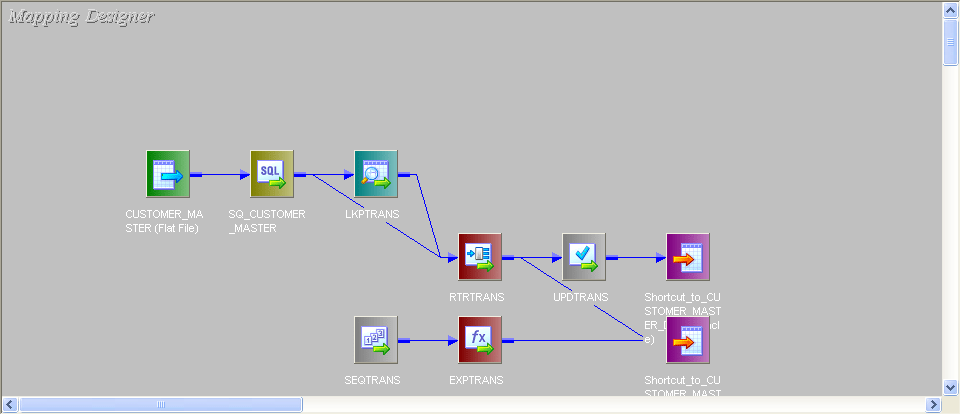

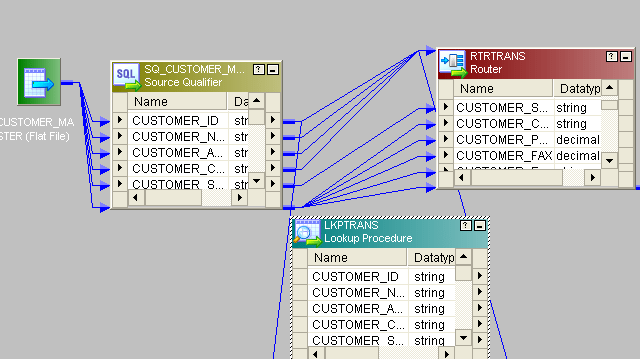

- This is the entire mapping:

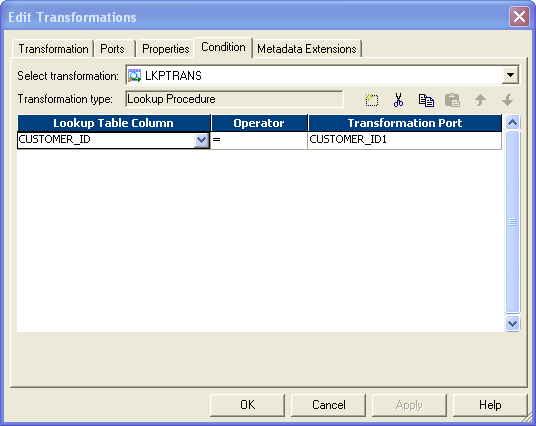

- Connect the lookup to the source. In the Lookup, fetch the data from the target table and send only the CUSTOMER_ID port from the source to the lookup.

- Give the lookup condition like this:

- Then, send the rest of the columns from the source to one router transformation.

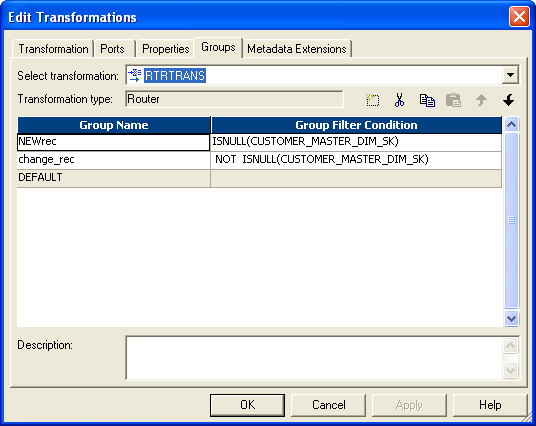

- In router create two groups and give condition like this:

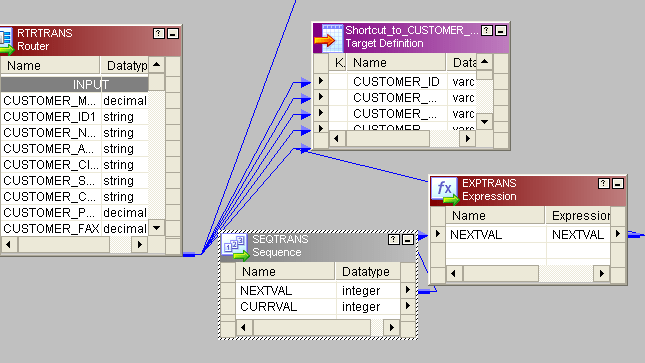

- For new records we have to generate a new customer_id. For that, take a sequence generator and connect the next value port to the expression. Connect the New_rec group from the router to target1 (bring two instances of the target into the mapping, one for new records and the other for old records). Then connect next_val from the expression to the customer_id column of the target.

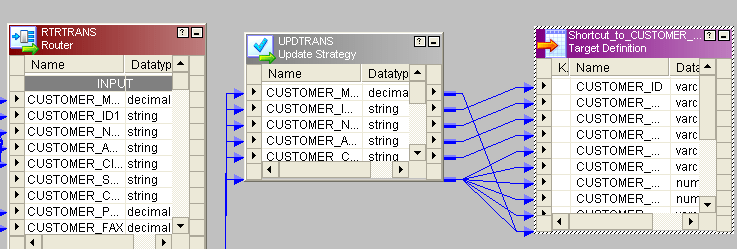

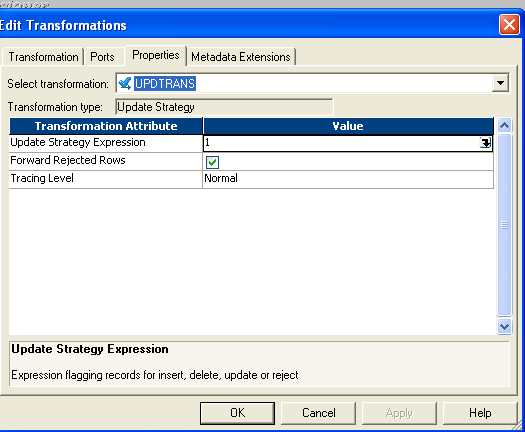

- Bring the Change_rec group of the router to one update strategy and give the condition like this:

- Instead of 1 you can give dd_update in the update strategy. Then connect to the target.
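The overall effect of this mapping, insert what is new and overwrite what has changed, can be summarized in a short Python sketch. It simplifies the mapping: the target is modelled as a dict keyed by customer_id, the source key is reused rather than generated by a sequence generator, and the `city` column is a hypothetical attribute added for the example.

```python
def scd_type1(target, source_rows):
    """Apply SCD Type 1 logic; target is a dict keyed by customer_id (changed in place)."""
    inserted, updated = [], []
    for row in source_rows:
        key = row["customer_id"]
        existing = target.get(key)   # lookup on CUSTOMER_ID
        if existing is None:
            target[key] = dict(row)  # new_rec group: insert
            inserted.append(key)
        elif existing != row:
            target[key] = dict(row)  # change_rec group: overwrite, no history kept
            updated.append(key)
    return inserted, updated


target = {1: {"customer_id": 1, "city": "KL"}}
source_rows = [
    {"customer_id": 1, "city": "KJ"},  # changed attribute
    {"customer_id": 2, "city": "KH"},  # new customer
]
inserted, updated = scd_type1(target, source_rows)
```

After the run the old value for customer 1 is gone, which is the defining property of Type 1: no historical data is tracked.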

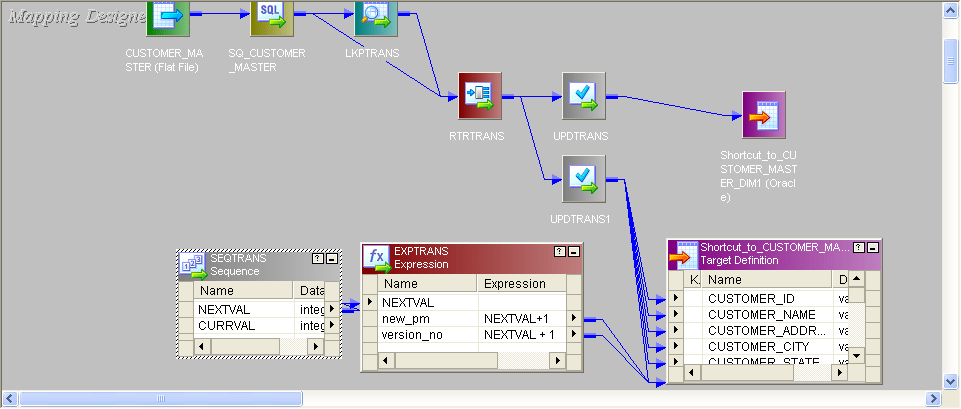

26. Explain in detail SCD TYPE 2 through mapping.

SCD Type2 Mapping

In a Type 2 Slowly Changing Dimension, if a new record is added to the existing table with new information, both the original and the new record will be present, with the new record having its own primary key.

- To identify new_rec we should add one new_pm and one version_no.

- This is the source:

- This is the entire mapping:

- All the procedures are similar to the SCD TYPE1 mapping. The only difference is that, from the router, new_rec will come to one update_strategy where the condition dd_insert will be given, and one new_pm and version_no will be added before sending to the target.

- Old_rec will also come to an update_strategy where the condition dd_insert will be given, and will then be sent to the target.

27. Explain SCD TYPE 3 through mapping.

SCD Type3 Mapping

In SCD Type3, there should be two columns added to identify a single attribute. It stores one-time historical data along with the current data.

- This is the source:

- This is the entire mapping:

- Up to the router transformation, the whole procedure is the same as described in SCD Type1.

- The only difference is after the router: bring the new_rec to an update_strategy, give the condition dd_insert and send it to the target.

- Create one new primary key and send it to the target. For old_rec, send it to an update_strategy, set the condition dd_insert and send it to the target.

- You can create one effective_date column in the old_rec table.

28. Differentiate between Reusable Transformation and Mapplet.

Any Informatica transformation created in the Transformation Developer, or a non-reusable transformation promoted to reusable from the Mapping Designer, which can be used in multiple mappings is known as a Reusable Transformation.

When we add a reusable transformation to a mapping, we actually add an instance of the transformation. Since the instance of a reusable transformation is a pointer to that transformation, when we change the transformation in the Transformation Developer, its instances reflect these changes.

A Mapplet is a reusable object created in the Mapplet Designer which contains a set of transformations and lets us reuse the transformation logic in multiple mappings.

A Mapplet can contain as many transformations as we need. Like a reusable transformation, when we use a mapplet in a mapping, we use an instance of the mapplet, and any change made to the mapplet is inherited by all instances of the mapplet.

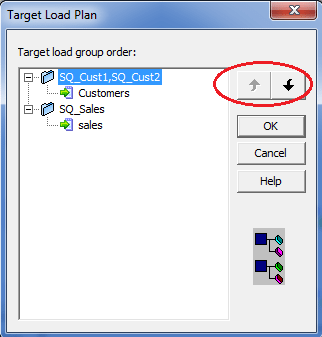
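The "instance is a pointer" behaviour can be pictured with a small Python analogy. The classes and names below are purely hypothetical and have nothing to do with Informatica's internals; they only show why a change to the shared definition is visible from every mapping that uses it.

```python
class ReusableTransformation:
    """Hypothetical stand-in for a definition kept in the Transformation Developer."""

    def __init__(self, name, expression):
        self.name = name
        self.expression = expression


class TransformationInstance:
    """An instance inside a mapping: it holds a reference, not a copy."""

    def __init__(self, definition):
        self.definition = definition

    @property
    def expression(self):
        # Always read through to the shared definition.
        return self.definition.expression


trim_name = ReusableTransformation("exp_trim_name", "LTRIM(RTRIM(NAME))")
in_mapping_a = TransformationInstance(trim_name)
in_mapping_b = TransformationInstance(trim_name)

# Editing the definition once changes what both mappings see.
trim_name.expression = "UPPER(LTRIM(RTRIM(NAME)))"
```

A mapplet instance behaves the same way in this analogy, except that the shared definition would hold a set of transformations instead of one.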

29. What is meant by Target load plan?

Target Load Order:

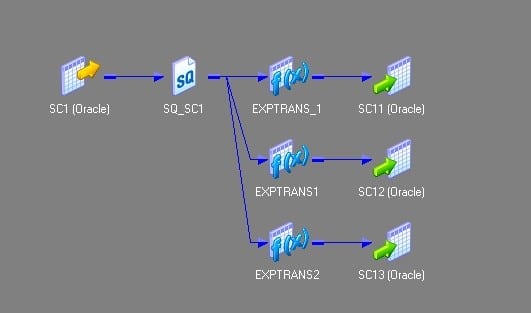

Target load order (or target load plan) is used to specify the order in which the Integration Service loads the targets. You can specify a target load order based on the source qualifier transformations in a mapping. If you have multiple source qualifier transformations connected to multiple targets, you can specify the order in which the Integration Service loads the data into the targets.

Target Load Order Group:

A target load order group is the collection of source qualifiers, transformations and targets linked in a mapping. The Integration Service reads the sources in a target load order group concurrently, and it processes the target load order groups sequentially. The following figure shows the two target load order groups in a single mapping.

Use of Target Load Order:

Target load order is useful when the data of one target depends on the data of another target. For example, the employees table data depends on the departments data because of the primary-key and foreign-key relationship. So, the departments table should be loaded first and then the employees table. Target load order is useful when you want to maintain referential integrity when inserting, deleting or updating tables that have primary key and foreign key constraints.
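The reasoning behind the departments-before-employees rule is a dependency ordering. In PowerCenter the order is set by hand in the Target Load Plan dialog, so the Python sketch below is not something Informatica runs; it only illustrates how a valid order follows from the foreign-key dependencies. The function name and the dictionary layout are assumptions for the example.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def load_order(depends_on):
    """Return targets in an order where every parent table comes before its children.

    `depends_on` maps each target to the set of targets it references
    through a foreign key.
    """
    return list(TopologicalSorter(depends_on).static_order())


# employees has a foreign key to departments, so departments must load first.
order = load_order({"employees": {"departments"}, "departments": set()})
```

For deletes the order is typically reversed, since child rows have to go before the parent rows they reference.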

Target Load Order Setting:

You can set the target load order or plan in the Mapping Designer. Follow the steps below to configure the target load order:

1. Log in to the PowerCenter Designer and create a mapping that contains multiple target load order groups.

2. Click on Mappings in the toolbar and then on Target Load Plan. The following dialog box will pop up, listing all the source qualifier transformations in the mapping and the targets that receive data from each source qualifier.

3. Select a source qualifier from the list.

4. Click the Up and Down buttons to move the source qualifier within the load order.

5. Repeat steps 3 and 4 for other source qualifiers you want to reorder.

6. Click OK.

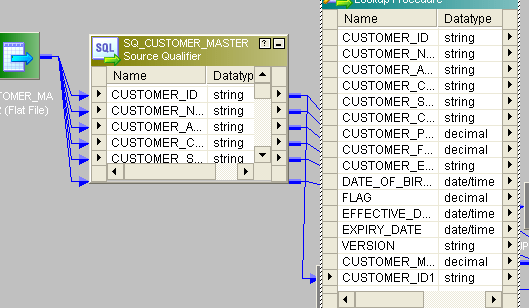

30. Write the Unconnected Lookup syntax and explain how to return more than one column.

We can only return one port from the Unconnected Lookup transformation. As the Unconnected Lookup is called from another transformation, we cannot return multiple columns using the Unconnected Lookup transformation.

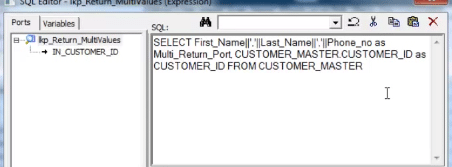

However, there is a trick. We can use the SQL override and concatenate the multiple columns that we need to return. When we call the lookup from another transformation, we need to separate the columns again using substring.

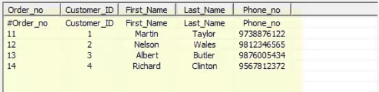
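In PowerCenter, an unconnected lookup is called from an expression with the `:LKP.lookup_name(argument)` form. The concatenate-then-split trick itself can be sketched in Python as below. This is only an analogy: the in-memory `CUSTOMER_MASTER` data, the `~` delimiter and the function names are assumptions for the example, and the real work would be done by the SQL override and substring functions in the expression transformation.

```python
DELIM = "~"  # hypothetical delimiter; pick one that cannot occur in the data

# Hypothetical stand-in for the Customer_master table: id -> (name, phone).
CUSTOMER_MASTER = {
    101: ("Alice", "555-0100"),
    102: ("Bob", "555-0101"),
}


def lkp_customer(customer_id):
    """Stand-in for the unconnected lookup: it can return only ONE value,
    so name and phone are concatenated, as the SQL override would do."""
    row = CUSTOMER_MASTER.get(customer_id)
    if row is None:
        return None  # no match, like a lookup returning NULL
    name, phone = row
    return f"{name}{DELIM}{phone}"


def split_lookup_result(value):
    """Stand-in for the calling expression: split the single return value
    back into separate columns using the delimiter position."""
    if value is None:
        return None, None
    pos = value.find(DELIM)
    return value[:pos], value[pos + 1:]


name, phone = split_lookup_result(lkp_customer(101))
```

The lookup is called once per row and the split happens in the caller, which is why the delimiter has to be chosen so that it never appears inside the concatenated values.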

As a scenario, we are taking one source containing the Customer_id and Order_id columns.

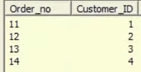

Source:

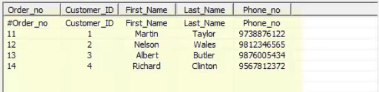

We need to look up the Customer_master table, which holds the customer information, like Name, Phone etc.

The target should look like this:

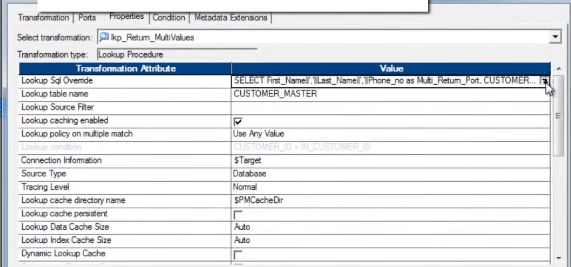

Let's take a look at the Unconnected Lookup.

The SQL Override, with concatenated port/column:

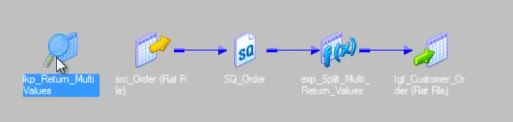

The entire mapping will look like this.

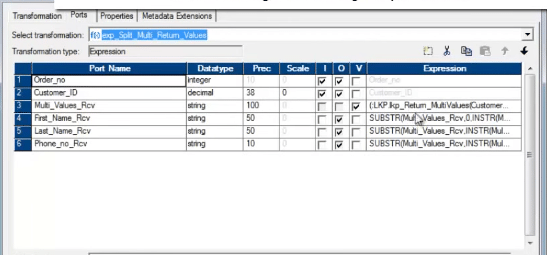

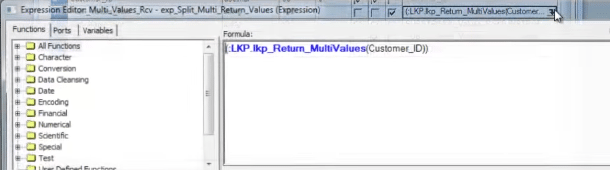

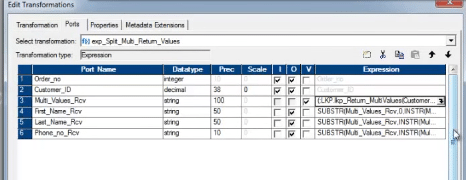

We are calling the unconnected lookup from one expression transformation.

Below is the screenshot of the expression transformation.

After execution of the above mapping, below is the target that is populated.

I am pretty confident that after going through both of these Informatica Interview Questions blogs, you will be fully prepared to take an Informatica interview without any hiccups. If you wish to deep dive into Informatica with use cases, I recommend you go through our website and enrol at the earliest.

The Edureka course on Informatica helps you master ETL and data mining using Informatica PowerCenter Designer. The course aims to enhance your career path in data mining through live projects and interactive tutorials by industry experts.

Got a question for us? Please mention it in the comments section and we will get back to you.

Source: https://www.edureka.co/blog/interview-questions/informatica-interview-questions/